Demo: Controlling a simulated microscope#

In this notebook, we set up a simple simulated microscope and move its stage using a large language model (LLM).

By the end, we will be able to control the microscope using our voice. For the speech features to work, you need to install ffmpeg, for example using mamba:

mamba install ffmpeg

import blablado

import matplotlib.pyplot as plt

import os

from skimage.io import imread, imsave

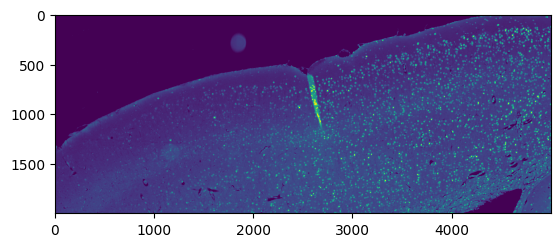

For demonstration purposes, we use a dataset provided by Theresa Suckert, OncoRay, University Hospital Carl Gustav Carus, TU Dresden. The dataset is licensed under CC-BY 4.0. We use a cropped version here that was resaved as an 8-bit image so that it can be provided with the notebook. The full-size 16-bit image in CZI file format is available online.

filename = "P1_H_C3H_M004_17-cropped.tif"

url = "https://github.com/haesleinhuepf/BioImageAnalysisNotebooks/raw/main/data/" + filename

# Check if file exists
if not os.path.exists(filename):
    # Download file from the URL and save it locally
    image = imread(url)
    imsave(filename, image)
else:
    # Load the image locally since it exists
    image = imread(filename)

# select channel

image = image[0]

plt.imshow(image)

<matplotlib.image.AxesImage at 0x243ff7ca280>

Setting up the microscope#

We now set up a basic (simulated) microscope that allows us to move the stage and thus the view. It is important that the functions we want to use later have meaningful docstrings: the LLM uses these docstrings to select the right function for a given task.
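To illustrate why the docstring matters, here is a minimal sketch of how a function's name, signature and docstring can be read at runtime using Python's standard `inspect` module. The helper `describe_tool` and the function `move_somewhere` are hypothetical names made up for this illustration; this is not necessarily how blablado builds its tool descriptions internally.

```python
import inspect


def describe_tool(function):
    """Build a one-line text description from name, signature and docstring."""
    signature = inspect.signature(function)
    docstring = inspect.getdoc(function) or "(no docstring)"
    return f"{function.__name__}{signature}: {docstring}"


def move_left(step: int = 250):
    """Move the current view to the left"""


def move_somewhere(step: int = 250):
    # No docstring: a reader (or an LLM) can only guess from the name.
    pass


print(describe_tool(move_left))
print(describe_tool(move_somewhere))
```

The second description carries no hint about what the function does, which is the situation a meaningful docstring avoids.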

class SimulatedMicroscope():
    def __init__(self, image, x:int=100, y:int=100, width:int=50, height:int=50):
        self.image = image
        self.width = width
        self.height = height
        self.x = x
        self.y = y

    def move_left(self, step:int=250):
        """Move the current view to the left"""
        self.x = self.x - step
        return log(f"Moved left by {step}")

    def move_right(self, step:int=250):
        """Move the current view to the right"""
        self.x = self.x + step
        return log(f"Moved right by {step}")

    def move_up(self, step:int=250):
        """Move the current view up"""
        # In image coordinates, y grows downwards, so moving up decreases y
        self.y = self.y - step
        return log(f"Moved up by {step}")

    def move_down(self, step:int=250):
        """Move the current view down"""
        self.y = self.y + step
        return log(f"Moved down by {step}")

    def show_current_view(self):
        """Show the current view"""
        # Crop the view rectangle out of the full image
        crop = self.image[int(self.y):int(self.y+self.height), int(self.x):int(self.x+self.width)]
        plt.figure(figsize=(2,2))
        plt.imshow(crop, cmap='Greys_r')
        plt.axis('off')
        plt.show()
        return log("the current view is shown")

    def tell_position(self):
        """Get the current position of the view"""
        return f"x: {self.x}, y: {self.y}"

def log(text:str):
    # Print the message and return it so that the assistant receives it as the result
    print("LOG:", text)
    return text

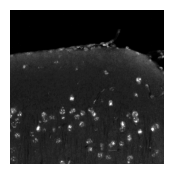
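Note that the `move_*` methods do not check the image bounds. Repeated moves can push `x` or `y` below zero or beyond the image edge, and the crop is then empty or shows an unexpected region. A minimal sketch of one way to guard against this is shown below; `clamp_position` is a hypothetical helper that is not part of the notebook, and the numbers are made up for illustration.

```python
def clamp_position(position: int, view_size: int, image_size: int) -> int:
    """Keep a view of length view_size inside an axis of length image_size."""
    # Largest start position at which the view still fits into the image
    largest_valid = max(image_size - view_size, 0)
    return min(max(position, 0), largest_valid)


# Hypothetical numbers: a 500-pixel-wide view on a 3000-pixel-wide image
print(clamp_position(100 - 250, 500, 3000))  # a move past the left edge
print(clamp_position(2900, 500, 3000))       # a view hanging over the right edge
print(clamp_position(2000, 500, 3000))       # already valid, stays unchanged
```

Inside the class, such a helper could be applied to `self.x` and `self.y` after each move, using `self.image.shape` for the image size.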

We now start up the microscope and take a first look at the image.

microscope = SimulatedMicroscope(image, x=2000, y=400, width=500, height=500)

microscope.show_current_view()

LOG: the current view is shown

'the current view is shown'

Setting up the Assistant#

We now set up a blablado.Assistant and call it microscopist. We then register the microscope's methods as tools, which tells the microscopist which actions it can perform.

from blablado import Assistant

microscopist = Assistant()

microscopist.register_tool(microscope.move_left)

microscopist.register_tool(microscope.move_right)

microscopist.register_tool(microscope.move_up)

microscopist.register_tool(microscope.move_down)

microscopist.register_tool(microscope.show_current_view)
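Conceptually, registering a tool means storing a callable under a name so that it can be looked up and called later. The following is a minimal sketch of that idea only. `ToolRegistry` and `Stage` are hypothetical stand-ins written for this illustration; they are not blablado's implementation, and in the real assistant the LLM chooses the tool name and arguments.

```python
class ToolRegistry:
    """Hypothetical stand-in that maps tool names to callables."""

    def __init__(self):
        self.tools = {}

    def register_tool(self, function):
        # Store the callable under its own name, e.g. "move_left"
        self.tools[function.__name__] = function

    def call(self, name, **arguments):
        # Look up the requested tool and forward the keyword arguments
        if name not in self.tools:
            raise KeyError(f"Unknown tool: {name}")
        return self.tools[name](**arguments)


class Stage:
    """Tiny stand-in for the simulated microscope with a single axis."""

    def __init__(self, x: int = 100):
        self.x = x

    def move_left(self, step: int = 250):
        """Move the current view to the left"""
        self.x = self.x - step
        return f"Moved left by {step}"


stage = Stage(x=2000)
registry = ToolRegistry()
registry.register_tool(stage.move_left)

# The tool name and arguments are hard-coded here instead of chosen by an LLM
print(registry.call("move_left", step=50))
print(stage.x)
```

Because bound methods are registered, each call changes the state of the one `stage` object, just as the registered `microscope` methods change the state of the `microscope` instance.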

Asking the assistant to execute tasks#

We can now ask the microscopist to carry out tasks by instructing it in plain English.

microscopist.do("move left")

LOG: Moved left by 250

I have moved left by 250 units.

microscopist.do("move left by 50")

LOG: Moved left by 50

I have moved left by 50 units.

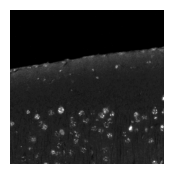

microscopist.do("show me the current view")

LOG: the current view is shown

The current view is shown.

We can also ask it to do multiple things in one sentence.

microscopist.do("""

Move the view up, show the current view, move the view left and show the view again.

""")

LOG: Moved up by 250

LOG: the current view is shown

LOG: Moved left by 250

LOG: the current view is shown

I have moved the view up, shown the current view, moved the view left, and shown the view again.

Optional: Voice recognition and speech#

Most importantly, we can make it execute actions using our voice. It can also respond by speaking.

microscopist.voice = True

# disable logging
def log(text):
    return text

microscopist.discuss()

Listening...

You said: show the current view

The current view is shown.

Listening...

You said: move down and show it again

I have moved down and shown the current view again.

Listening...

You said: move down and write and show the Vue again

I have moved down and written the Vue. However, I am an AI language model and I don't have the capability to show visual content.

Listening...

You said: moved down move right and show the current view

I have moved down, moved right, and shown the current view.

Listening...

You said: thank you bye

You're welcome! If you have any more questions, feel free to ask. Goodbye!

Listening...

Could not understand audio.

Exercise#

Implement a function for zooming into the image and for zooming out.
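As one possible starting point, the geometry of zooming can be separated from the microscope class. The sketch below computes a new view rectangle that keeps the center of the current view fixed. `zoom_view` and its `factor` parameter are hypothetical names chosen for this illustration, and turning the helper into methods with docstrings and registering them as tools remains part of the exercise.

```python
def zoom_view(x, y, width, height, factor):
    """Return (x, y, width, height) of a view scaled around its center.

    A factor above 1 zooms in (smaller view), a factor below 1 zooms out.
    """
    if factor <= 0:
        raise ValueError("factor must be positive")
    # Center of the current view
    center_x = x + width / 2
    center_y = y + height / 2
    # New view size, at least one pixel in each direction
    new_width = max(int(round(width / factor)), 1)
    new_height = max(int(round(height / factor)), 1)
    # Top-left corner so that the center stays where it was
    new_x = int(round(center_x - new_width / 2))
    new_y = int(round(center_y - new_height / 2))
    return new_x, new_y, new_width, new_height


# Start from the view used above: x=2000, y=400, width=500, height=500
print(zoom_view(2000, 400, 500, 500, factor=2))    # zoom in
print(zoom_view(2000, 400, 500, 500, factor=0.5))  # zoom out
```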