3D Image Segmentation#

Image segmentation in 3D is challenging for several reasons: In many microscopy imaging techniques, image quality varies in space: For example intensity and/or contrast degrades the deeper you image inside a sample. Furthermore, touching nuclei are hard to differentiate in an automated way. Last but not least, anisotropy is difficult to handle depending on the applied algorithms and respective given parameters. Some algorithms, like the Voronoi-Otsu-Labeling approach demonstrated here, only work for isotropic data.

from skimage.io import imread

from pyclesperanto_prototype import imshow

import pyclesperanto_prototype as cle

import matplotlib.pyplot as plt

import napari

from napari.utils import nbscreenshot

# For 3D processing, powerful graphics processing units might be necessary

# Here we select a GTX or RTX graphics card if available. If none is available

# the following code might still work, but it may be a bit slow

cle.select_device('TX')

<NVIDIA GeForce RTX 3050 Ti Laptop GPU on Platform: NVIDIA CUDA (1 refs)>

To demonstrate the workflow, we’re using cropped and resampled image data from the Broad Bio Image Challenge: Ljosa V, Sokolnicki KL, Carpenter AE (2012). Annotated high-throughput microscopy image sets for validation. Nature Methods 9(7):637 / doi. PMID: 22743765 PMCID: PMC3627348. Available at http://dx.doi.org/10.1038/nmeth.2083

input_image = imread("data/BMP4blastocystC3-cropped_resampled_8bit.tif")

voxel_size_x = 0.202

voxel_size_y = 0.202

voxel_size_z = 1

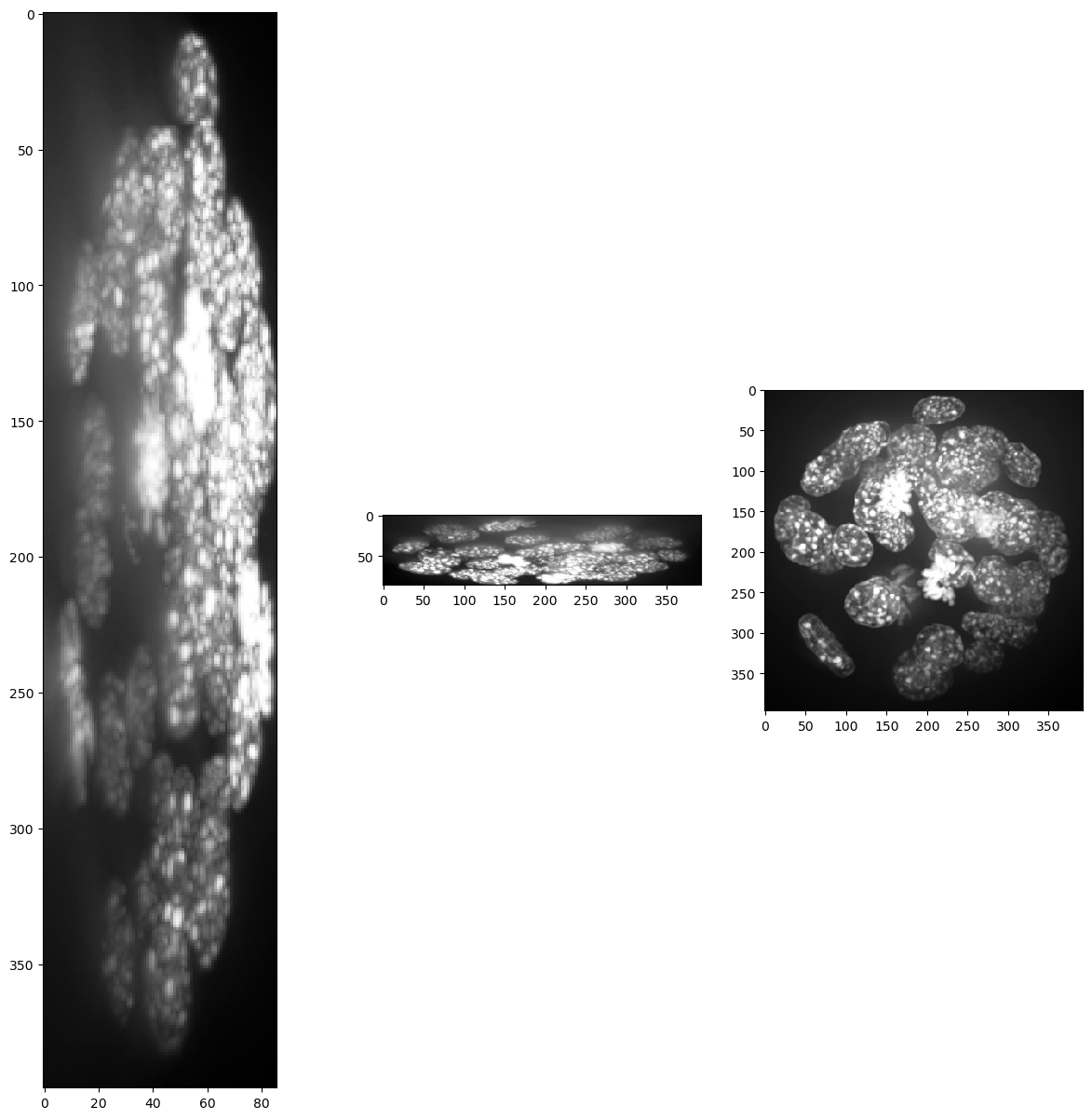

For visualisation purposes we show intensity projections along X, Y and Z.

def show(image_to_show, labels=False):

"""

This function generates three projections: in X-, Y- and Z-direction and shows them.

"""

projection_x = cle.maximum_x_projection(image_to_show)

projection_y = cle.maximum_y_projection(image_to_show)

projection_z = cle.maximum_z_projection(image_to_show)

fig, axs = plt.subplots(1, 3, figsize=(15, 15))

cle.imshow(projection_x, plot=axs[0], labels=labels)

cle.imshow(projection_y, plot=axs[1], labels=labels)

cle.imshow(projection_z, plot=axs[2], labels=labels)

plt.show()

show(input_image)

print(input_image.shape)

C:\Users\haase\mambaforge\envs\tea2024\Lib\site-packages\pyclesperanto_prototype\_tier9\_imshow.py:34: UserWarning: cle.imshow is deprecated, use stackview.imshow instead.

warnings.warn("cle.imshow is deprecated, use stackview.imshow instead.")

(86, 396, 393)

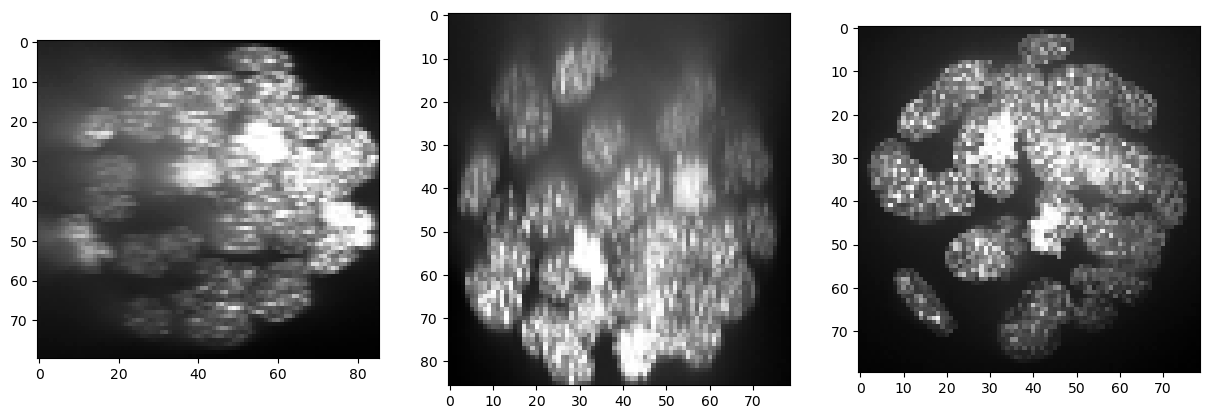

Obviously, voxel size is not isotropic. Thus, we scale the image with the voxel size used as scaling factor to get an image stack with isotropic voxels.

resampled = cle.scale(input_image,

factor_x=voxel_size_x,

factor_y=voxel_size_y,

factor_z=voxel_size_z,

auto_size=True)

show(resampled)

print(resampled.shape)

(86, 80, 79)

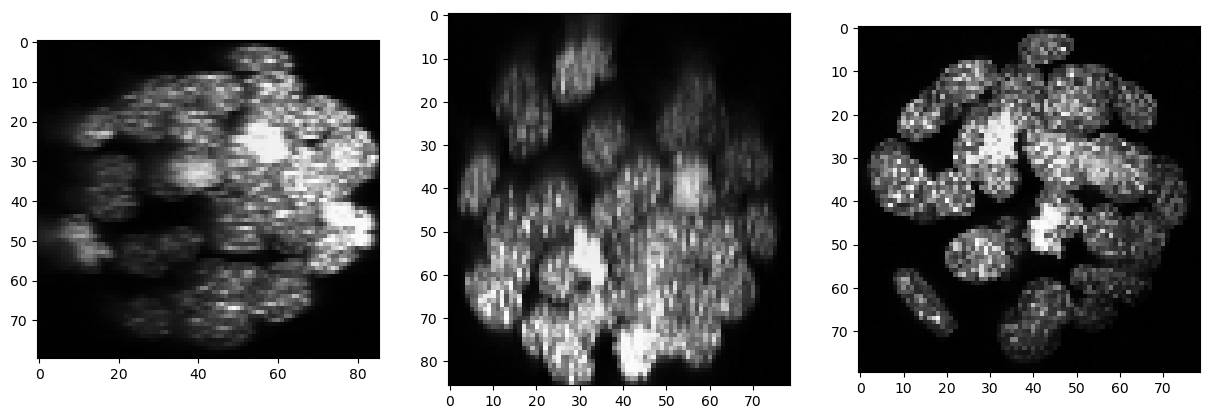

Background correction#

As there is background intensity between the nuclei, we remove it using a top-hat filter.

backgrund_subtracted = cle.top_hat_box(resampled, radius_x=5, radius_y=5, radius_z=5)

show(backgrund_subtracted)

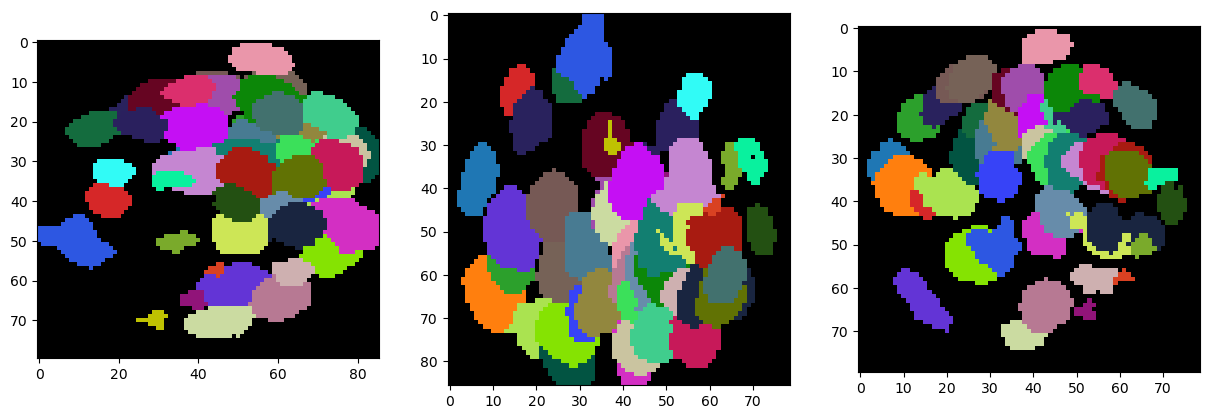

Segmentation#

For segmenting the nuclei in 3D we use the Voronoi-Otsu-Labeling technique we have seen earlier. The algorithm works in 3D as in 2D, but only with isotropic voxels. Thanks to the rescaling applied above, our data is isotropic.

segmented = cle.voronoi_otsu_labeling(backgrund_subtracted, spot_sigma=3, outline_sigma=1)

show(segmented, labels=True)

Visualization in 3D#

For visualization in 3D you can use napari.

# start napari

viewer = napari.Viewer()

# show images

viewer.add_image(cle.pull(resampled))

viewer.add_image(cle.pull(backgrund_subtracted))

viewer.add_labels(cle.pull(segmented))

<Labels layer 'Labels' at 0x14d7e66cdd0>

viewer.dims.current_step = (40, 0, 0)

nbscreenshot(viewer)

We can switch to a 3D view by clicking on the 3D button in the bottom left corner.

nbscreenshot(viewer)

We can then also tip and tilt the view.

nbscreenshot(viewer)

Exercise#

Load the skimage.data.cells3d dataset, extract the nuclei channel and segment the nuclei. Print out the number of nuclei, which do not touch the image border.